1. Introduction

One of the most contentious applications of artificial intelligence is automated grading, which is rapidly changing lecture halls and classrooms. At the heart of the debate is a central question: can AI grading be made equitable? As universities test these tools, researchers and educators are weighing their potential benefits and drawbacks.

2. What Is AI Grading?

The use of artificial intelligence (AI) systems to assess student projects, tests, and assignments is known as AI grading. Algorithms evaluate responses and allocate scores rather than depending entirely on human teachers. Large classes with excessive grading requirements are where these systems are most frequently used.

Fundamentally, AI grading works by using algorithms that mimic or enhance human assessment. Natural language processing (NLP) tools, for instance, can analyze a student’s essay by dissecting its grammar, structure, vocabulary, and argumentation. In a similar vein, multiple-choice tests can be accurately scored instantly, and coding assignments are evaluated in relation to efficiency standards and expected results.

AI grading is being used by higher education institutions to handle a variety of tasks. Universities are investigating machine learning systems to evaluate essays or lab reports, while online learning platforms frequently use AI to grade quizzes and short answers. Faster turnaround, consistency, and fairness are the goals of these platforms.

However, whether AI grading tools can accurately and impartially capture the breadth of student learning and creativity is at the center of the controversy. Determining whether fairness can actually be attained in academic settings requires an understanding of the workings of AI’s evaluation system.

3. Why Use AI in Grading?

The urgent issues facing contemporary education are the driving force behind the push for AI in grading. For starters, it saves teachers a great deal of time. It is not feasible for instructors to give thorough and timely feedback on every assignment while overseeing hundreds of students. However, AI can produce results in a matter of seconds.

Second, AI systems encourage standardization and efficiency. The degree of strictness or leniency displayed by human graders can vary, frequently based on variables such as mood or weariness. The same guidelines are applied uniformly to all submissions by automated grading tools.

Many institutions are optimistic that AI grading fairness could surpass traditional grading because of its perceived neutrality.

Third, AI may lessen unintentional biases in people. For instance, handwriting, accents, or even a student’s reputation may unintentionally affect a teacher. A well-trained AI system prioritizes content over outside influences.

Lastly, the digital transformation of higher education is consistent with AI grading. Massive open online courses (MOOCs), hybrid programs, and online courses all call for scalable solutions that traditional grading cannot provide. Automated grading gives educational institutions a way to meet these increasing demands.

Even with these benefits, there are still concerns: Does quicker grading equate to more equitable grading? Is it possible for machines to accurately assess complex reasoning or creativity? These inquiries take us to the more intricate workings of AI grading.

4. How Does AI Grading Work?

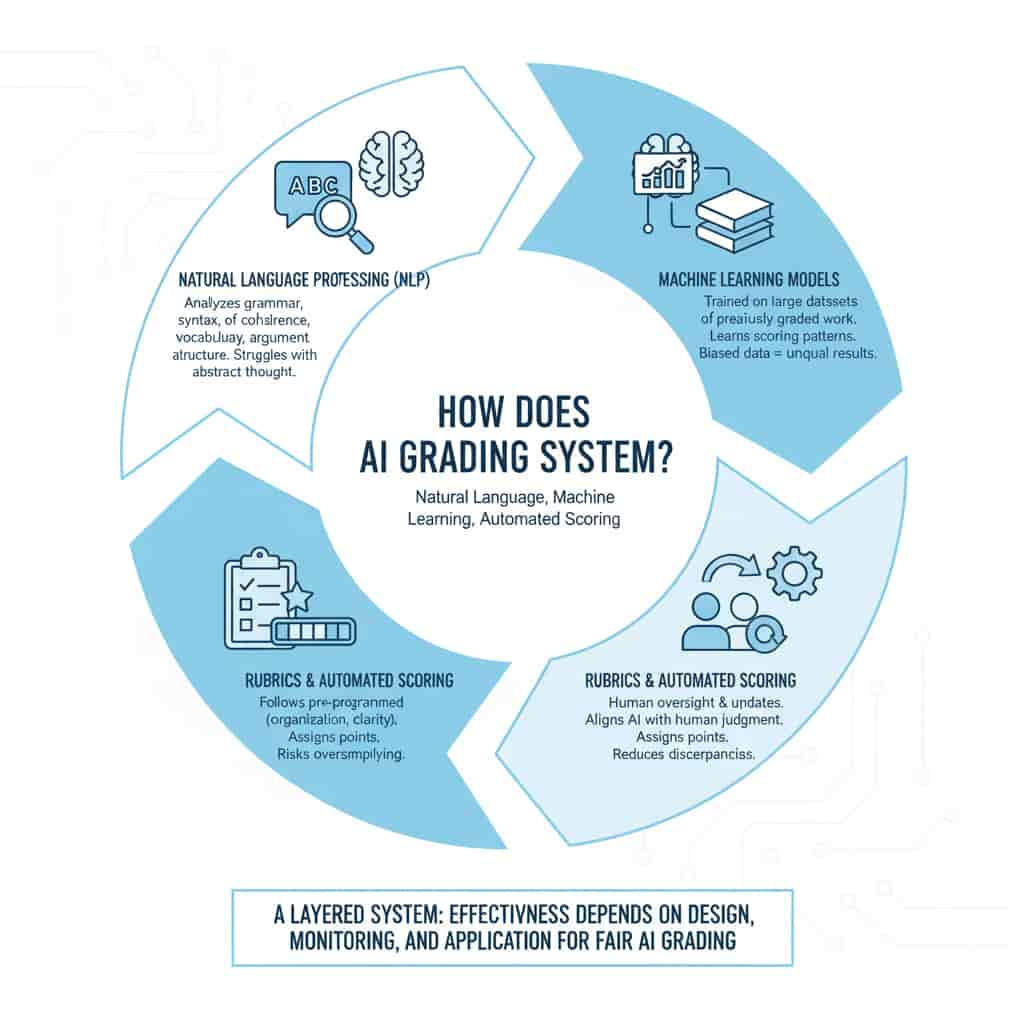

AI grading systems function through a combination of natural language processing, machine learning, and automated scoring frameworks. Each component plays a role in shaping the outcomes and ultimately influences AI grading fairness.

1. Natural Language Processing (NLP).

For essays, NLP breaks down text into measurable components. It analyzes grammar, syntax, coherence, vocabulary use, and argument structure. Some systems even attempt to gauge creativity or originality through semantic analysis. However, NLP often struggles with abstract thought or cultural nuance.

2. Machine Learning Models.

AI grading tools are trained on large datasets of previously graded work. The algorithm “learns” what scores are typically assigned to certain features of writing or problem-solving. The more diverse and balanced the training data, the greater the chance of achieving fair results. Yet, biased or limited training sets can perpetuate inequality.

3. Rubrics and Automated Scoring.

Many AI graders follow pre-programmed rubrics aligned with course goals. These rubrics allow the system to assign points based on observable criteria, such as organization, clarity, or use of evidence. While efficient, they risk oversimplifying learning outcomes by focusing on measurable, surface-level features.

4. Continuous Feedback Loops.

More sophisticated platforms include ongoing model updates and human oversight. This hybrid approach helps keep the AI in line with human judgment and minimizes disparities that could jeopardize the fairness of AI grading.

In reality, AI grading is a multi-layered system of algorithms rather than a single technology. How well these systems are developed, overseen, and implemented in particular educational contexts has a significant impact on their efficacy.
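The rubric and human-oversight layers described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular grading product works: the `Criterion` class, the keyword matching, and the `review_below` threshold are all hypothetical stand-ins for the far richer signals a real system would use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Criterion:
    """One rubric row. The keyword list is a hypothetical surface-level signal."""
    name: str
    max_points: int
    keywords: tuple[str, ...]


def score_submission(text: str, rubric: list[Criterion]) -> dict[str, int]:
    """Award each criterion's points in proportion to the keywords found."""
    lowered = text.lower()
    scores: dict[str, int] = {}
    for criterion in rubric:
        if not criterion.keywords:
            scores[criterion.name] = 0
            continue
        hits = sum(1 for kw in criterion.keywords if kw in lowered)
        # Floor division keeps the score an integer and never exceeds max_points.
        scores[criterion.name] = criterion.max_points * hits // len(criterion.keywords)
    return scores


def needs_human_review(scores: dict[str, int], rubric: list[Criterion],
                       review_below: float = 0.5) -> bool:
    """Route low preliminary scores to a human grader (assumed policy)."""
    maximum = sum(c.max_points for c in rubric)
    return sum(scores.values()) / maximum < review_below


rubric = [
    Criterion("evidence", 4, ("because", "for example")),
    Criterion("organization", 2, ("first", "finally")),
]
essay = "First, grading is slow because classes are large. Finally, AI helps."
preliminary = score_submission(essay, rubric)
print(preliminary, needs_human_review(preliminary, rubric))
```

The sketch also shows the weakness discussed in this article: a scorer that counts surface features rewards formulaic phrasing, which is why the preliminary score is passed to a human check rather than treated as final.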

5. Benefits of AI Grading

The adoption of AI grading systems brings several significant benefits, many of which directly support fairness and equity in education.

1. Consistency Across Submissions.

Human graders may unconsciously apply different standards depending on the day or student identity. AI, however, applies rules uniformly. This consistency strengthens the case for AI grading fairness, particularly in large classes.

2. Speed and Scalability.

AI can process thousands of assignments in minutes. This rapid turnaround gives students faster feedback, allowing them to improve more quickly. In contrast, delayed grading can hinder learning progress.

3. Supporting Overburdened Teachers.

Educators often face heavy grading loads. By automating routine assessment, AI frees them to focus on mentoring, curriculum design, and personalized student support, areas where human expertise is irreplaceable.

4. Potential to Reduce Human Bias.

While no system is free from flaws, AI can minimize certain biases. For example, it does not consider handwriting, student reputation, or cultural stereotypes. Properly trained models can help level the playing field for diverse student populations.

5. Adaptability to Large-Scale Assessments.

Human grading is not feasible in large-scale open online courses or nationwide testing situations. AI provides scalable solutions without sacrificing consistency or turnaround time.

These advantages help to explain why educational institutions are still experimenting with automated grading systems. Every strength, though, comes with drawbacks that raise doubts about the fairness of AI grading in general.

6. Challenges and Concerns with AI Grading

Despite its advantages, AI grading faces a host of issues that complicate the promise of fairness.

1. Algorithmic Bias and Training Data.

AI is only as fair as the data it learns from. If past grading data reflects bias, whether based on language, cultural context, or institutional preferences, the AI will inherit and reproduce those same biases. This directly undermines AI grading fairness.

2. Lack of Transparency.

AI grading often functions as a “black box,” meaning educators and students cannot easily understand how scores are determined. Without transparency, it’s difficult to trust that results are fair or accurate.

3. Overemphasis on Measurable Metrics.

AI tends to reward grammar, structure, and formulaic writing while struggling to evaluate creativity, critical thinking, or originality. As a result, students who think outside the box may be penalized, raising serious concerns about fairness in assessing higher-order skills.

4. Student Trust and Acceptance.

For students, grades represent more than numbers: they shape motivation, self-confidence, and future opportunities. If students feel AI grading is unfair, their trust in the entire educational system may erode. Trust is central to AI grading fairness, and skepticism remains widespread.

5. Ethical and Legal Considerations.

Educational institutions must navigate privacy concerns, accountability for errors, and compliance with academic integrity policies. If an AI system wrongly penalizes a student, who takes responsibility: the institution, the software developer, or the instructor?

6. Accessibility and Equity.

AI’s failure to recognize non-standard expressions or alternative approaches could disproportionately impact students from diverse linguistic and cultural backgrounds. This calls into question whether AI grading fairness is applicable everywhere.

In short, worries about bias, transparency, and ethical responsibility frequently outweigh the speed and consistency that AI offers. Until these issues are resolved, the question of whether AI grading can ever be genuinely fair remains open.

7. Is AI Grading Truly Fair and Unbiased?

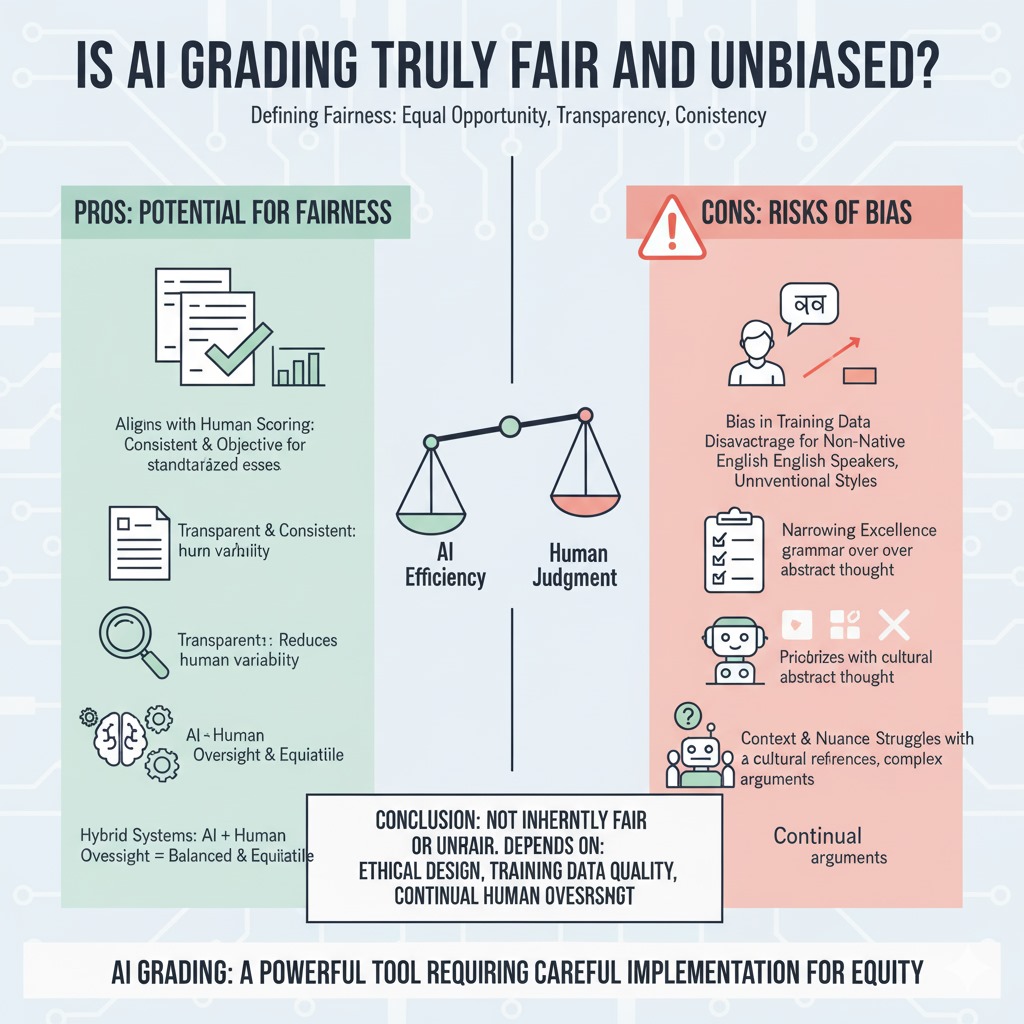

We must first define fairness in assessment before we can respond to the question of whether AI grading is actually fair. Fairness is frequently defined in educational theory as providing every student with an equal chance to demonstrate learning free from institutionalized bias. Fairness in AI also refers to evaluations that are transparent, consistent, and context-sensitive.

The results of research are not entirely consistent. According to some studies, AI scores closely match human scores, especially for standardized essays. This implies that fairness may be possible in situations that are predictable and structured.

However, other studies draw attention to biases against non-native English speakers, unusual writing styles, or arguments that are culturally specific. These disparities show how context and dataset quality have a significant impact on AI grading fairness.
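How closely AI and human scores match can be expressed with two simple measures: the share of submissions where both scores are identical (exact agreement) and the share where they differ by at most one point (adjacent agreement). The following is a minimal sketch; the function name and the sample scores are made up for illustration and do not come from any study.

```python
def agreement_rates(ai_scores: list[int], human_scores: list[int]) -> tuple[float, float]:
    """Return (exact, adjacent) agreement between paired AI and human scores."""
    if not ai_scores or len(ai_scores) != len(human_scores):
        raise ValueError("score lists must be non-empty and of equal length")
    pairs = list(zip(ai_scores, human_scores))
    # Exact agreement: both graders gave the same score.
    exact = sum(1 for ai, human in pairs if ai == human) / len(pairs)
    # Adjacent agreement: scores differ by no more than one point.
    adjacent = sum(1 for ai, human in pairs if abs(ai - human) <= 1) / len(pairs)
    return exact, adjacent


# Illustrative scores on a 1-5 scale (invented data).
exact, adjacent = agreement_rates([4, 3, 5, 2], [4, 4, 3, 2])
print(f"exact={exact:.2f} adjacent={adjacent:.2f}")
```

High overall agreement does not rule out the group-level disparities mentioned above, so a figure like this is a starting point rather than proof of fairness.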

Critics argue that equity requires more than statistical consistency. True fairness also considers the values embedded in assessment design. If AI grading prioritizes measurable grammar and structure over creativity or nuanced argument, it risks narrowing the definition of academic excellence.

Proponents argue that the best chance of attaining fairness is through hybrid systems, in which humans evaluate the final results after AI provides the initial scoring. Institutions can strike a balance between speed and equity by fusing human judgment with machine efficiency.

In the end, AI grading is neither intrinsically fair nor intrinsically unfair. How systems are designed, trained, and managed determines how equitable they are. The argument centers not on whether AI should grade, but on how to ensure AI grading fairness through ethical practice, open design, and ongoing human supervision.

8. How to Make AI Grading More Fair and Transparent

Ensuring equity in AI grading requires intentional design, monitoring, and policy frameworks.

1. Use Diverse and Representative Training Data.

Training data should reflect a wide range of student populations, writing styles, and cultural contexts. This reduces the risk of systemic bias and strengthens AI grading fairness across diverse classrooms.

2. Implement Human Oversight.

Hybrid models, where AI provides preliminary scores and humans validate results, ensure that students benefit from both efficiency and contextual sensitivity. Oversight also builds trust among students and faculty.

3. Regular Auditing of AI Systems.

Continuous evaluation and third-party audits can identify biases, inaccuracies, and unintended consequences. Institutions should treat AI grading as a process requiring regular updates, not a one-time installation.

4. Transparent Communication with Students and Educators.

Explaining how AI scoring works, its limitations, and the role of human review helps build confidence. Students are more likely to accept results when they understand the process behind them.

5. Develop Ethical Guidelines and Policy Frameworks.

Clear policies on data privacy, accountability, and error resolution are essential. Institutions must clarify who is responsible when mistakes occur and how students can appeal results.

By following these practices, universities can move closer to achieving AI grading fairness while maintaining academic integrity and student trust.
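One way to picture the regular audits described above is a check that compares AI and human scores separately for each student group. The sketch below is only an illustration: the group labels, the sample records, and the 0.5-point tolerance are assumptions, and a real audit would need larger samples and proper statistical testing.

```python
from collections import defaultdict
from statistics import mean


def score_gap_by_group(records):
    """Average (AI score - human score) per group.

    records: iterable of (group, ai_score, human_score) tuples.
    A negative gap means the AI scored that group lower than humans did.
    """
    gaps = defaultdict(list)
    for group, ai_score, human_score in records:
        gaps[group].append(ai_score - human_score)
    return {group: mean(diffs) for group, diffs in gaps.items()}


def flag_groups(gap_by_group, tolerance=0.5):
    """List groups whose average gap exceeds the tolerance (assumed threshold)."""
    return sorted(g for g, gap in gap_by_group.items() if abs(gap) > tolerance)


# Invented audit sample: (group, AI score, human score).
sample = [
    ("native", 4, 4),
    ("native", 3, 4),
    ("non_native", 2, 4),
    ("non_native", 3, 3),
]
gaps = score_gap_by_group(sample)
print(gaps, flag_groups(gaps))
```

A flagged group is a prompt for human investigation, for example reviewing the training data or the rubric, not an automatic verdict that the system is biased.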

9. The Future of AI in Educational Assessment

In the future, AI’s contribution to grading is likely to grow. Emerging technologies such as explainable AI promise to make algorithms more transparent and intelligible, addressing one of the main obstacles to AI grading fairness.

Advanced semantic analysis may be incorporated into future systems, allowing machines to assess creativity, subtlety, and cultural context more accurately. Additionally, adaptive models could modify grading rubrics to fit particular curricula, providing more individualized evaluations without sacrificing consistency.

However, the future of AI grading will likely be hybrid rather than fully automated. Human judgment remains critical for assessing complex skills like critical thinking, creativity, and ethical reasoning. The challenge is striking the right balance between machine efficiency and human insight.

As policymakers and educators continue to shape the role of AI in classrooms, fairness must remain at the center of innovation. If done thoughtfully, AI has the potential not only to grade faster but also to support a more equitable education system.

10. Frequently Asked Questions

1. Can AI completely replace human graders?

No. While AI can handle routine tasks efficiently, human graders remain essential for evaluating complex skills like creativity, ethics, and nuanced reasoning.

2. What types of assignments are best suited for AI grading?

Structured tasks like multiple-choice exams, coding exercises, and formulaic essays are well-suited. Open-ended, creative work still requires human evaluation.

3. How do educators check if AI grading is fair?

By comparing AI scores with human grading samples, auditing results regularly, and ensuring training datasets are diverse and unbiased.

4. What role does bias in training data play?

Training data directly influences outcomes. If the dataset is biased, the AI will reflect and amplify those biases, undermining AI grading fairness.

5. Are AI-graded results legally valid in academic institutions?

Yes, but institutions must ensure compliance with policies on transparency, accountability, and student rights to appeal scores.

6. Do students trust AI grading systems?

Trust varies. Students often question whether AI can understand creativity or nuance. Transparency and hybrid grading models can improve trust.

7. Can AI grading be customized to fit different curricula?

Yes. Many systems allow rubrics to be tailored to specific learning outcomes, improving alignment with course goals.

8. What happens when AI makes mistakes?

Institutions must have review processes and appeal mechanisms in place. Human oversight ensures errors can be corrected.

9. How are universities currently using AI in grading?

Many institutions use AI for large-scale testing, online learning platforms, and preliminary essay scoring, often combined with human review.

10. Will future AI systems solve fairness and bias concerns completely?

Not entirely. While advancements will reduce bias, complete fairness may be impossible. Human oversight will always be necessary.
